• 10/04:

Goals: gather all the depth, camera and skeleton data in Processing and send it to Max, or else settle on [dp.kinect] and buy the object. Polish and simplify the interface created for the midterms. Add a simple video patch to test computer performance.

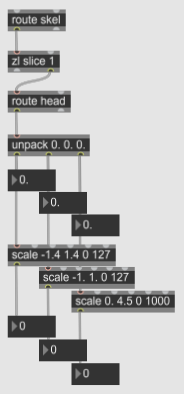

For my midterms, I used the trial version of [dp.kinect2], which is very reliable and easy to use. Right now I am routing the skeleton data from [dp.kinect2] and scaling each joint from its minimum and maximum values to MIDI values. I found those minimum and maximum values by moving to each extreme side of the frame and entering the readings by hand. Here is what this looks like in Max:
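The same scaling can be sketched outside Max. This is only an illustration in Java (the language Processing is built on), not the patch itself: the class name `JointScaler`, the method `scaleToMidi` and the calibration range in `main` are hypothetical, and the clamping of out-of-range readings is an added assumption.

```java
public class JointScaler {
    /**
     * Linearly maps a joint coordinate from its calibrated range
     * [inMin, inMax] to the MIDI range 0..127. Readings outside the
     * calibrated range are clamped (an assumption of this sketch).
     */
    public static int scaleToMidi(float value, float inMin, float inMax) {
        if (inMax == inMin) {
            return 0; // degenerate range: avoid dividing by zero
        }
        float normalized = (value - inMin) / (inMax - inMin);
        normalized = Math.max(0f, Math.min(1f, normalized));
        return Math.round(normalized * 127f);
    }

    public static void main(String[] args) {
        // Hypothetical calibration: X measured by hand between -0.8 and 0.8
        System.out.println(scaleToMidi(0.0f, -0.8f, 0.8f));
    }
}
```

Because the minimum and maximum are passed in per call, each joint and axis can keep its own hand-measured range.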

Afterwards, I developed a few comparison objects so that the mapping of the joints would be less obvious. These objects are:

[dist1], [dist2] and [dist3]: compare the distance between two points in one, two or three dimensions ([dist1] is in the image below; the other two are just extensions of this operation);

[monitorChange]: outputs the updated value only when the change in the input value exceeds a certain threshold;
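The logic behind these two kinds of objects could look roughly like the sketch below. It is written in Java rather than Max, and the names `Comparisons`, `dist` and `ChangeMonitor` are hypothetical. It also assumes that the change is measured against the last value that was actually output, which the description above does not specify.

```java
public class Comparisons {
    /**
     * Euclidean distance between two points that have the same number of
     * coordinates, so the same method covers one, two or three dimensions.
     */
    public static double dist(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("points must have the same dimension");
        }
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    /** Lets a value through only when it has moved far enough from the last output. */
    public static class ChangeMonitor {
        private final double threshold;
        private Double lastOutput = null; // nothing has been output yet

        public ChangeMonitor(double threshold) {
            this.threshold = threshold;
        }

        /** Returns the value if it is passed on, or null if the change was too small. */
        public Double update(double value) {
            if (lastOutput == null || Math.abs(value - lastOutput) > threshold) {
                lastOutput = value;
                return value;
            }
            return null;
        }
    }
}
```

Comparing against the last output rather than the last input means slow drifts still get reported once they add up to more than the threshold.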

With the output values, I sent MIDI messages to Ableton Live using two other objects that I created to facilitate this process. They were:

[midi4live]: sends MIDI messages to a MIDI track in Ableton;

[seq4live]: a step sequencer that uses randomness to choose the steps whenever triggered by the performer’s movements, sending MIDI messages to control beats in Ableton Live;
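As a rough illustration of the idea of a randomized step sequencer that emits MIDI, here is a sketch in Java using the standard `javax.sound.midi` classes. It is not how [seq4live] is built: the class `RandomStepSequencer`, the `density` parameter, the fixed seed and the use of channel 0 are all assumptions made for the example.

```java
import java.util.Random;
import javax.sound.midi.InvalidMidiDataException;
import javax.sound.midi.ShortMessage;

public class RandomStepSequencer {
    private final boolean[] steps;
    private final Random random;

    public RandomStepSequencer(int stepCount, long seed) {
        this.steps = new boolean[stepCount];
        this.random = new Random(seed);
    }

    /**
     * Called when a movement trigger arrives: re-rolls which steps are active.
     * density is the probability (0..1) that any given step is switched on.
     */
    public void trigger(double density) {
        for (int i = 0; i < steps.length; i++) {
            steps[i] = random.nextDouble() < density;
        }
    }

    /** Step indices wrap around, so a running counter can be passed in directly. */
    public boolean isActive(int step) {
        return steps[Math.floorMod(step, steps.length)];
    }

    /** Builds a note-on message for an active step, or returns null for a silent one. */
    public ShortMessage messageForStep(int step, int note, int velocity) {
        if (!isActive(step)) {
            return null;
        }
        try {
            // Channel 0 is an arbitrary choice for this sketch
            return new ShortMessage(ShortMessage.NOTE_ON, 0, note, velocity);
        } catch (InvalidMidiDataException e) {
            throw new IllegalArgumentException("note and velocity must be 0..127", e);
        }
    }
}
```

The message would still need to be handed to a MIDI output that Ableton Live listens to, which this sketch leaves out.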

That was all working fine, but I was still unsure about dedicating time and money to a discontinued product. I therefore wanted to try gathering the Kinect data with Processing, since I would learn a lot from it and possibly gain a better understanding of what is happening behind the scenes.

While searching for Kinect libraries for Processing, I looked at Shiffman’s Kinect for Processing tutorial, which doesn’t cover skeleton tracking but links to Thomas Sanchez Lengeling’s KinectPV2 library for Processing. This library seemed to cover most of the functionality I need, so I tried it to see whether I could get the same data, with the same reliability, as with [dp.kinect].

Starting with the library’s examples, I managed to get some skeleton tracking, even though it was definitely not as stable as [dp.kinect].

I managed to get the X and Y data of each joint, but I still haven’t figured out how Z works in this library. Unfortunately, I only noticed that once I had clean OSC readings in Max. I also haven’t yet figured out how to send the position of each joint in a loop, without assigning them by hand. In the screenshot below, all joints correspond to the value of the right hand. I believe I’d need to turn the string in the jointName array into a variable name, and I haven’t found a way to do that in Processing yet. Still, I was able to create a series of OSC messages corresponding to each joint using the oscP5 library, and to receive them in Max.
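One possible way around the variable-name problem is to avoid separate variables altogether: keep the joint positions in an array that is indexed in the same order as the name array, and build each OSC address from the name inside the loop. The sketch below shows that pattern in plain Java. The names `JointOscLoop`, `JointMessage`, `JOINT_NAMES` and the `/joint/...` address scheme are hypothetical, and the three joints are only a sample.

```java
import java.util.ArrayList;
import java.util.List;

public class JointOscLoop {
    // Hypothetical subset of joint names; index i must match the joint
    // index used for the positions array.
    static final String[] JOINT_NAMES = {"head", "handLeft", "handRight"};

    /** One outgoing message: an OSC-style address plus its two float arguments. */
    public static class JointMessage {
        public final String address;
        public final float x;
        public final float y;

        public JointMessage(String address, float x, float y) {
            this.address = address;
            this.x = x;
            this.y = y;
        }
    }

    /**
     * Builds one message per joint by indexing into positions, where
     * positions[i] holds {x, y} for the joint named names[i].
     */
    public static List<JointMessage> buildMessages(String[] names, float[][] positions) {
        List<JointMessage> out = new ArrayList<>();
        for (int i = 0; i < names.length; i++) {
            // In a Processing sketch with oscP5, this is where an OscMessage
            // could be created with the address, given x and y via add(),
            // and sent with oscP5.send().
            out.add(new JointMessage("/joint/" + names[i], positions[i][0], positions[i][1]));
        }
        return out;
    }
}
```

With this layout the string is only used to label the message, so nothing has to be converted into a variable name, and each joint sends its own position instead of the right hand's.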

I didn’t finish all my goals for this week, but I am very happy with all that I learned about Kinect in Processing and OSC, so I believe that this time was very important for this project.